Generative AI is advancing rapidly, and evaluating it is proving to be both a major challenge and an opportunity to create feedback loops that help models learn. At the Wallenberg Scientific Forum 2025 (WASF), held in Rånäs, Sweden, leading researchers and industry experts came together to tackle the future of generative AI evaluation. Their mission: to develop smarter, more human-centered methods for assessing models that generate text, images, sound, and video.

“If we want safe, efficient, and high-quality systems that are free from unwanted bias, evaluation is a key tool,” says Johanna Björklund, professor at Umeå University and one of the organizers of the forum.

Deep generative models have transformed AI through their ability to replicate complex patterns in multimodal data, combining, for example, text, images, and sound. But evaluating these models poses a challenge. Unlike traditional systems, generative models rarely produce a single “correct” output. Standard metrics often fall short and human feedback is essential. While evaluations involving people require more resources, they allow models to be optimized based on human preferences. This enables trained systems to exceed the quality of their original training data, marking a paradigm shift in how AI is developed.

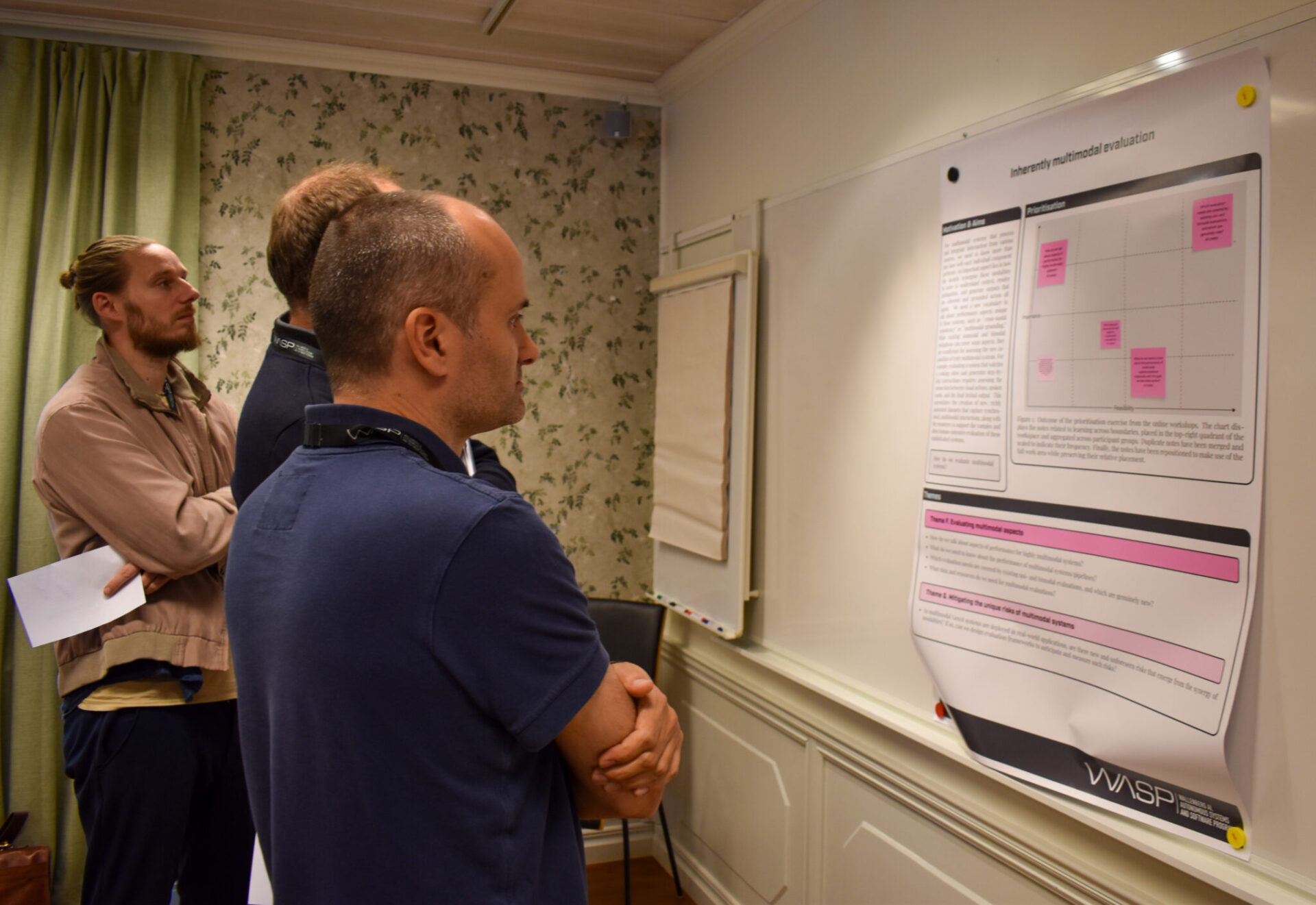

To tackle this challenge, the forum adopted the double diamond method to identify concrete activities that would strengthen the quality and status of generative model evaluation.

“The forum began weeks in advance,” explains Gustav Eje Henter, professor at KTH Royal Institute of Technology and one of the organizers of the forum. “We launched surveys and digital workshops to surface key challenges. Once on site, participants formed interest-based groups and collaborated intensively over several days. It all culminated in a series of presentations where each team shared insights and proposed actionable initiatives.”

Participants from all over the world

The forum drew a diverse group of participants from around the world – the most distant guest travelled in from Tokyo. Representatives from Meta, the University of Edinburgh, The Allen Institute, Hugging Face, the University of Amsterdam, NICT, LMU Munich, Carnegie Mellon University, and others brought a wide range of perspectives. Attendees spanned all career stages, from PhD students to senior professors, and covered a broad spectrum of modalities and focus areas, from training and evaluating systems to deploying them in real-world applications.

Laying the groundwork for unified AI evaluation

The forum produced several key outcomes. Participants agreed on the need for a shared terminology to facilitate cross-disciplinary collaboration. They also identified the importance of a manual for multimodal evaluation, dynamic tools to track scientific literature, and digital repositories for sharing datasets and benchmarks. The event concluded with a one-hour presentation session in which each group shared its findings; these now form the foundation of a forthcoming position paper. The central questions explored included how to raise the profile of evaluation, identify the right evaluation targets, build better benchmarks, assess multimodal aspects, mitigate the unique risks of multimodal systems, unify evaluation practices across disciplines, and move beyond the purely quantitative metrics that often dominate machine learning research.

“We are building a long-term effort to improve how generative AI is evaluated, both in academia and beyond,” says Henter. “The energy and commitment at WASF 2025 exceeded our expectations and we’re very excited to see where this leads.”

About Wallenberg Scientific Forum (WASF)

WASF is an invitation-only, collaborative forum supported by WASP, designed to bring together leading researchers and practitioners to address foundational challenges in AI. The 2025 edition was themed Measuring What Matters: Evaluation as a Driver of Generative AI.

Next year’s WASF is in spring 2026 and will be organized by Luc De Raedt, Wallenberg Guest Professor in Computer Science and Artificial Intelligence, Örebro University, and Professor of Computer Science, KU Leuven. The theme is Foundations of NeuroSymbolic Artificial Intelligence.

Published: November 19th, 2025
