For Kathlén Kohn, WASP Fellow at KTH Royal Institute of Technology in Stockholm, mathematics is not just a tool to solve problems. It is a way to connect diverse disciplines, including machine learning, computer vision, physics, and even music. Her recent work centers on the field of neuroalgebraic geometry, which brings algebraic geometry into the study of neural networks.

The goal of Kathlén’s research is to better understand one of the most pressing questions in artificial intelligence and deep learning theory: why do neural networks work as well as they do?

Neural networks are made up of layers of information-processing nodes called neurons. The best neural network for a problem is one that solves it well while being fast, efficient, and stable.

“To design networks is science, engineering and art,” says Kathlén. “But right now, the practical design of neural networks is often more art than science.”

Currently, neural networks are a hot area of development. “There are thousands of researchers and engineers trying different network architectures every day,” she says. “But there’s no theory that tells you, ‘Here is the optimal network for this problem.’”

Kathlén and her collaborators are working to change that by analyzing the geometry of the space of functions that a network can represent. She leverages powerful tools from algebraic geometry, a field that studies solutions to systems of polynomial equations and their geometric properties. When applied to neural networks, this perspective offers insights into how the architecture of a network affects the shape of its function space.
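To make the idea of a function space concrete, here is a minimal sketch using a toy example chosen for illustration; it is not taken from Kathlén's work. A linear network with two inputs, one hidden neuron, and two outputs can only represent 2×2 matrices of rank at most one, so its function space is the surface of matrices whose determinant is zero, a shape cut out by a single polynomial equation. The function name `network_matrix` and the sampling setup are assumptions made for this example.

```python
import numpy as np

def network_matrix(w1, w2):
    """End-to-end matrix of a 2-1-2 linear network: x -> w2 @ (w1 @ x)."""
    return w2 @ w1  # (2x1) @ (1x2) gives a 2x2 matrix of rank at most one

rng = np.random.default_rng(0)
dets = []
for _ in range(1000):
    w1 = rng.normal(size=(1, 2))  # weights into the single hidden neuron
    w2 = rng.normal(size=(2, 1))  # weights out of the single hidden neuron
    dets.append(np.linalg.det(network_matrix(w1, w2)))

# Every sampled network lies on the surface det(M) = 0, up to rounding error
max_abs_det = float(np.max(np.abs(dets)))
print(max_abs_det)

# The identity map has determinant 1, so this architecture cannot represent it
identity_det = float(np.linalg.det(np.eye(2)))
print(identity_det)
```

Changing the architecture changes the shape: with two hidden neurons instead of one, the same network could represent every 2×2 matrix, and the constraint disappears.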

In an ideal world, engineers building a neural network would have the mathematical equivalent of a catalog of design choices, Kathlén explains. They could then pick the architecture whose function space has the right shape for the problem at hand.

Progress through neuroalgebraic geometry

“Some things can only be proved using algebraic geometry,” Kathlén notes.

One example is implicit bias, a phenomenon where networks tend to favor certain types of solutions even without explicit instructions. Kathlén’s team has shown that some forms of this bias are caused by singularities in the function space. A singularity is a sharp edge within the function space, like the edge created when you fold a piece of paper.

These edges act like attractors. Depending on the type of singularity, they can slow down training or push the network toward simpler solutions. “We’ve proven that some forms of implicit bias in training come from these singularities,” she explains.
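A small numerical sketch of what a singularity is, using a toy surface chosen for illustration rather than anything from Kathlén's papers: the 2×2 matrices of rank at most one, which are the maps a linear network with a single hidden neuron can represent, satisfy ad − bc = 0. A point on such a surface is singular where the gradient of the defining polynomial vanishes. In this example the singularity is a single point, the tip of a cone, rather than a fold line. The helper `det_gradient` is a hypothetical name.

```python
import numpy as np

def det_gradient(m):
    """Gradient of det(m) = a*d - b*c with respect to (a, b, c, d)."""
    (a, b), (c, d) = m
    return np.array([d, -c, -b, a])

rng = np.random.default_rng(1)

# A random rank-one matrix: a smooth point of the surface det = 0
smooth_point = rng.normal(size=(2, 1)) @ rng.normal(size=(1, 2))
grad_at_smooth = det_gradient(smooth_point)

# The zero matrix: the gradient vanishes, so there is no well-defined tangent space
grad_at_zero = det_gradient(np.zeros((2, 2)))

print(np.linalg.norm(grad_at_smooth))  # nonzero
print(np.linalg.norm(grad_at_zero))    # 0.0
```

This only locates the singular point; it says nothing about how training behaves near it, which is the subject of the results described above.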

This effect is well known in practice. Training sometimes yields networks with many dead neurons, that is, neurons that never activate. Removing such neurons, a process known as pruning, has been shown to make networks smaller, faster, and more energy efficient.
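Dead neurons and pruning can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not the method analyzed by Kathlén's team: it uses a one-hidden-layer ReLU network with random weights, calls a neuron "dead" if it never outputs a positive value on a given dataset, and forces two neurons to be dead by giving them a large negative bias. All names and sizes are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def forward(x, w1, b1, w2):
    """One-hidden-layer ReLU network; returns outputs and hidden activations."""
    hidden = relu(x @ w1 + b1)
    return hidden @ w2, hidden

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(200, 3))  # 200 inputs with 3 features each
w1 = rng.normal(size=(3, 5))               # 5 hidden neurons
b1 = rng.normal(size=5)
b1[[1, 3]] = -100.0                        # assumption: force neurons 1 and 3 to be dead
w2 = rng.normal(size=(5, 2))

out_full, hidden = forward(x, w1, b1, w2)

# A neuron counts as dead here if it is never active on any input in x
alive = (hidden > 0).any(axis=0)
dead_indices = np.flatnonzero(~alive)

# Pruning: drop the incoming and outgoing weights of the dead neurons
out_pruned, _ = forward(x, w1[:, alive], b1[alive], w2[alive, :])

print(dead_indices)
print(np.allclose(out_full, out_pruned))
```

Because a dead neuron contributes exactly zero to the output on this data, the pruned network computes the same outputs with fewer parameters. On inputs outside the dataset a removed neuron could in principle become active, so in practice the check depends on how representative the data is.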

“Our theoretical work can now predict when and why this implicit bias effect happens,” says Kathlén. It is one example of how a better theoretical understanding of machine learning can lead to improved outcomes.

Research on neural network theory draws on many mathematical approaches. Optimization, differential equations, analysis, statistics, and combinatorics (graph theory, for example) all contribute. Kathlén argues that no single mathematical framework is sufficient to understand the mysteries of deep learning.

“You literally need all the parts of math,” she says, “including algebraic geometry.” She and her team coined the term Neuroalgebraic Geometry for the integration of the latter into deep learning theory.

Currently, Kathlén is looking into all possible ways of encoding symmetries into neural networks to theoretically analyze which of those ways improve network performance.

Bridging pure and applied mathematics

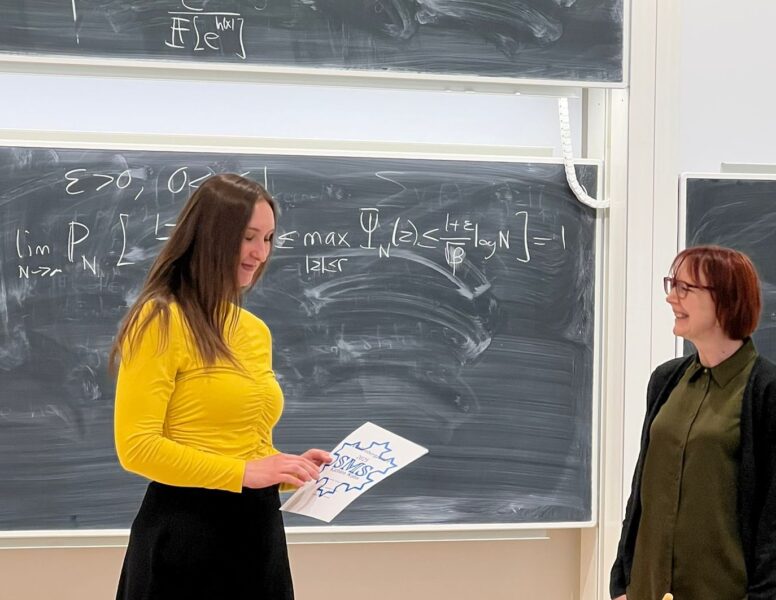

Since joining KTH in 2019, Kathlén has found a unique platform for blending pure and applied maths.

“When I saw the WASP positions advertised at KTH and Chalmers, I was so excited. I had been searching for a place where pure and applied mathematics could meet,” says Kathlén, acknowledging that mathematics as a discipline is often separated into pure maths on the one hand and applied maths on the other. As she was searching for the right academic home, “many institutions saw me as either too pure or too applied.”

Her position—and her affiliation with WASP—has also expanded her academic network. WASP has provided workshops, conferences, and opportunities for cross-institutional collaborations.

“You meet people you might not otherwise cross paths with,” she says. “It makes it much easier to integrate into the research landscape in Sweden. Even outside Sweden, WASP is highly recognized in areas like machine learning and signal processing, enhancing the visibility of WASP-affiliated researchers.”

When new people are hired or new projects start within WASP, Kathlén says it is much easier and faster to find out who they are and what they study than it would be outside such a program. “This is because WASP provides a sense of community,” she explains.

A self-proclaimed “super nerd”

Kathlén says her most satisfying moments come from discovering unexpected links between distant fields. “Often, those insights come not from areas I specialize in, but from seeing two other areas from an outsider’s perspective.”

Her research has, for example, uncovered a dictionary between maximum likelihood estimation and invariant theory, and a surprising bridge between scattering amplitudes in particle physics and barycentric coordinates.

Kathlén’s interdisciplinary curiosity is also reflected in her personal interests: playing instruments, carpentry, climbing, acrobatics, and more. She calls herself a “super nerd” with a collection of degrees in mathematics and computer science.

Her interest in acrobatics has led her to the discovery that being upside down can be helpful for research: “All the blood rushes to my head. It helps with math!”

Perhaps the complete mathematical toolkit of neuroalgebraic geometry is only a few more handstands away for Kathlén.

Published: August 19th, 2025
